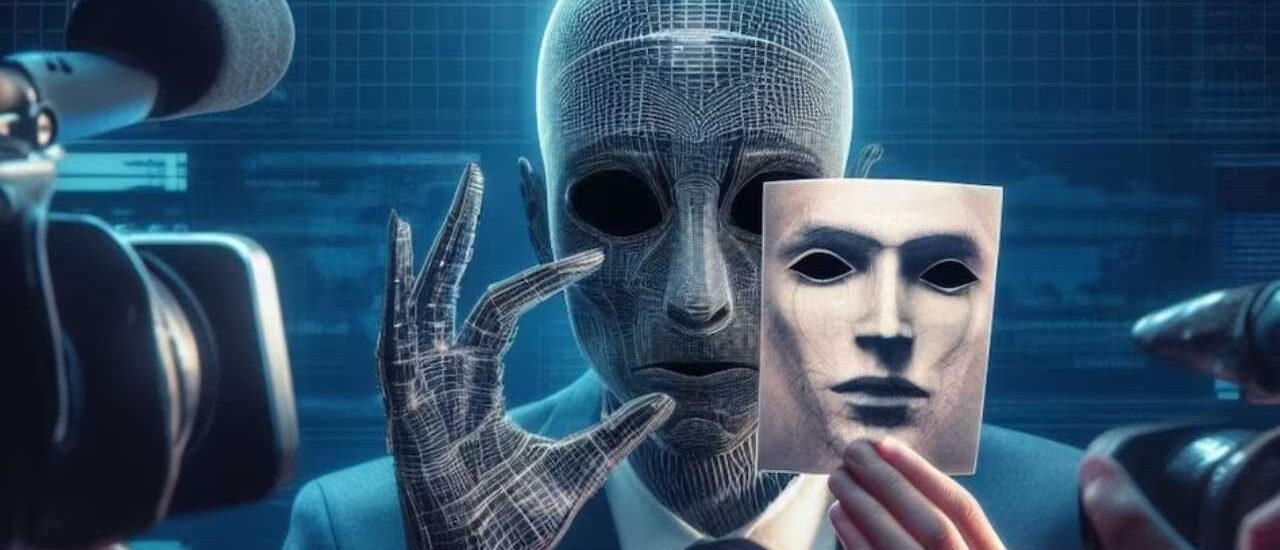

Throughout 2025, deepfake scams powered by AI-generated audio and video have escalated worldwide, targeting individuals, organizations, and entire industries. From voice-phishing ("vishing") calls that mimic loved ones to fraudulent video interviews in recruitment, the threat landscape is evolving rapidly.

What’s Driving the Surge?

- Advanced AI tools can now recreate realistic voices and faces from only a few seconds of authentic audio or video.

- Attackers mine social media posts, data leaks, and other public information to craft hyper-personalized scams that slip past traditional filters.

- Financial fraud is booming, with single high-profile deepfake cases costing victims millions of dollars.

- Cybersecurity reports show a dramatic rise in voice phishing and AI-generated scams.

Timeline of Key Incidents

2023: Early deepfake voice scams, using spoofed calls that impersonated executives, tricked companies into making large fund transfers.

2024: Deepfake identity fraud surged globally, with major incidents reported across sectors.

Early 2025: Voice phishing attacks rose significantly, especially during tax and holiday seasons.

May 2025: A journalist fooled a bank using a cloned voice, highlighting both the power of the technology and its risks.

Sector-Wide Impact

- Financial Services: Projected to lose billions of dollars annually to increasingly convincing AI-powered scams.

- Cryptocurrency Platforms: Have become prime targets for identity fraud that relies on manipulated media.

- Recruitment & HR: Fake candidates appearing in interviews as AI avatars raise concerns about identity verification and hiring integrity.

- Identity Verification: Traditional biometric and digital onboarding systems are being tested like never before.

Cybersecurity Response & Mitigation

- Adopt multi-factor authentication and avoid relying solely on voice or video for identity verification.

- Train employees to recognize AI-generated content and run regular security drills.

- Invest in emerging detection tools that analyze metadata, visual inconsistencies, and voice anomalies.

- Update compliance programs to cover synthetic-media threats and to include forensic review processes.
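The advice to avoid relying solely on voice or video can be turned into a concrete approval rule. The Python sketch below is hypothetical and not any standard or vendor API: the `TransferRequest` fields, the `approve_transfer` function, the 10,000 threshold, and the "call back a number already on file" check are all illustrative assumptions. It shows one way to make sure a convincing voice or face is never, by itself, enough to move money.

```python
from dataclasses import dataclass

# Hypothetical threshold (assumption) above which an extra out-of-band check is required.
HIGH_VALUE_THRESHOLD = 10_000


@dataclass
class TransferRequest:
    amount: float
    channel: str              # how the request arrived, e.g. "voice_call" or "video_call";
                              # recorded for auditing, deliberately NOT treated as proof of identity
    second_factor_ok: bool    # approved via an independent factor (e.g. an authenticator app)
    callback_confirmed: bool  # assumption: confirmed by calling back a number already on file


def approve_transfer(req: TransferRequest) -> bool:
    """Approve only if checks that do not depend on voice or video alone have passed."""
    # However convincing the caller sounds or looks, a second factor is always required.
    if not req.second_factor_ok:
        return False
    # Large transfers also need confirmation over a separate, pre-registered channel.
    if req.amount >= HIGH_VALUE_THRESHOLD and not req.callback_confirmed:
        return False
    return True


if __name__ == "__main__":
    # A "CEO" on a video call demanding an urgent wire, with no independent confirmation.
    urgent = TransferRequest(amount=250_000, channel="video_call",
                             second_factor_ok=False, callback_confirmed=False)
    print(approve_transfer(urgent))  # False
```

The `channel` field is kept but intentionally ignored by the decision logic: under this design, how realistic a request appears never counts as verification.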

Alert: Many deepfake detection tools are struggling to keep up with the rapid evolution of generative AI, leaving organizations vulnerable to advanced fraud techniques.

Conclusion

By mid-2025, deepfake scams had moved from experimental novelties to serious threats. The biggest challenge lies not in the technology itself but in how it undermines trust: between colleagues, between family members, and in institutions.

Countering this crisis will require a global effort that combines technological innovation, stronger regulation, and widespread education. Only through collective vigilance can we safeguard truth in an era of synthetic reality.